Hosthatch Tokyo - 70-80% packet loss

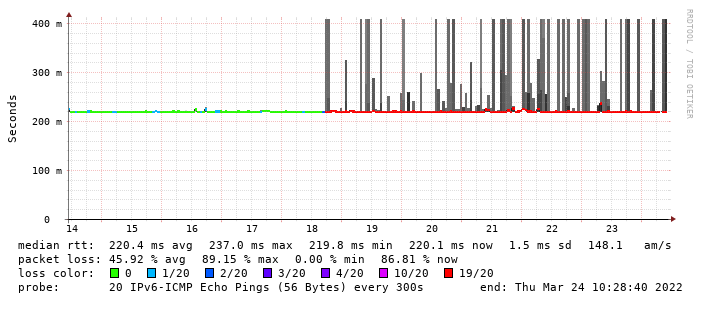

I'm seeing about 70-80% packet loss towards my VPS with Hosthatch in Tokyo for almost a week. They told me they were looking into it but so far nothing's changed and I also haven't received any update from them yet.

This makes the server unusable. Does anyone experience similar issues and/or have any hint or solution?

| Hop | ASN | Host | Loss% | Snt | Last | Avg | Best | Wrst | StDev |
|---|---|---|---|---|---|---|---|---|---|
| 1 | AS34081 | 2a0c:8fc1:6400::1 | 0.0% | 139 | 0.3 | 0.3 | 0.3 | 3.8 | 0.4 |
| 2 | AS??? | 2a02:6ea0:8:1200:5500::5 | 0.0% | 139 | 0.3 | 0.3 | 0.2 | 0.8 | 0.0 |
| 3 | AS??? | m247-tyo.cdn77.com (2a02:6ea0:2:1200:3490::2) | 0.0% | 138 | 0.4 | 0.7 | 0.3 | 13.1 | 1.4 |
| 4 | AS9009 | 2001:ac8:10:10:185:94:195:15 | 0.0% | 138 | 4.4 | 6.1 | 0.6 | 67.7 | 9.2 |
| 5 | AS9009 | vlan2922.as09.tyo1.jp.m247.com (2001:ac8:10:10:193:27:15:143) | 0.0% | 138 | 0.7 | 1.0 | 0.6 | 19.3 | 1.9 |
| 6 | AS63473 | 2406:ef80:4::1 | 72.5% | 138 | 0.6 | 0.6 | 0.5 | 0.9 | 0.0 |
| 7 | AS63473 | 2406:ef80:4:xxxx:xxxx:xxxx:xxxx:xxxx | 70.3% | 138 | 0.6 | 4.1 | 0.5 | 133.7 | 20.8 |
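A common rule of thumb when reading mtr output: loss at an intermediate hop only matters if it continues all the way to the final hop; otherwise it is usually just ICMP rate limiting on that router. In the trace above the loss starts at hop 6 (the first AS63473 hop) and persists to the destination. Below is a minimal sketch of that check in shell, assuming the input looks like mtr text output with a `Loss%` column; `first_lossy_hop` is a hypothetical helper name, not part of mtr.

```shell
# Print the hop number where the run of lossy hops that reaches the
# final hop begins. Prints nothing if the final hop shows no loss.
first_lossy_hop() {
  awk '
    {
      # Find the first field that looks like a percentage, e.g. "72.5%".
      loss = ""
      for (i = 1; i <= NF; i++) if ($i ~ /^[0-9.]+%$/) { loss = $i; break }
      if (loss == "") next            # header or unrelated line

      hop = $1
      sub(/[^0-9].*$/, "", hop)       # "6." or "6.|--" becomes "6"
      sub(/%$/, "", loss)

      if (loss + 0 > 0) {
        if (start == "") start = hop  # a new run of lossy hops begins
      } else {
        start = ""                    # loss did not persist; reset
      }
    }
    END { if (start != "") print start }
  '
}

# Example usage (assumes mtr is installed; the host is a placeholder):
#   mtr -6 -z -r -c 100 your.server.example | first_lossy_hop
```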

Need a free NAT LXC? -> https://microlxc.net/

Comments

Looks fine on my end, I can ping it without any packet loss.

IPv4 or IPv6?

4

Yep, seems like IPv4 is fine... I don't monitor IPv4 connectivity so I didn't notice.
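Since IPv4 looked fine while IPv6 was dropping packets, measuring both address families side by side makes this kind of divergence easier to spot. A rough sketch, assuming a Linux-style `ping` that accepts `-4`/`-6` and prints a summary line containing "% packet loss"; `loss_pct` and `check_family` are hypothetical helper names.

```shell
# Extract the packet-loss percentage from ping's summary line on stdin.
loss_pct() {
  sed -n 's/.*[ ,]\([0-9.][0-9.]*\)% packet loss.*/\1/p'
}

# Ping a host over one address family (4 or 6) and print "IPv<n> <loss>%".
check_family() {
  family=$1
  host=$2
  count=${3:-20}   # number of probes, defaults to 20
  printf 'IPv%s %s%%\n' "$family" \
    "$(ping "-$family" -c "$count" -q "$host" 2>/dev/null | loss_pct)"
}

# Example usage (the hostname is a placeholder):
#   check_family 4 your.server.example
#   check_family 6 your.server.example
```

Run from cron or a monitoring agent, this would flag an IPv6-only problem even when IPv4 stays healthy.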


Unfortunately, IPv6 connectivity between Hosthatch and M247 seems to break from time to time.

When I once had a VM in the UK, I had a similar issue that took weeks to get fixed, and other reports were shared on OGF.

Basically these are good boxes considering the price, especially the promo offers. But the M247 connectivity and IPv6 issues are probably among the downsides. I hope they are able to fix the v6 network within a reasonable time.

it-df.net: IT-Service David Froehlich | Individual network and hosting solutions | AS39083 | RIPE LIR services (IPv4, IPv6, ASN)

I wish I could agree, but this isn't limited to M247. Pretty much every upstream we deal with assigns lower priority to IPv6 issues, so it takes a lot of pushing to get them resolved.

ok, good to know it's not just me, at least.

I thought my configuration was wrong.

It's been fine for a few days but the issue's back since yesterday evening:

I sincerely hope that you'll be able to figure out a permanent fix with them and that Tokyo won't become the second location without IPv6 support.


Is Sydney still w/o IPv6? I thought it was temporary.

No, it works fine again but they don't support it:

So if things break again, I doubt they'll bother to fix it.


Seems their IPv6 router fe80::1 stopped responding completely.

Retried neighbor discovery (IPv6-to-MAC address resolution) 1000 times, roughly 1000 seconds, and got no reply.

$ sudo ndisc6 -n -1 -r 1000 fe80::1 ens3

Soliciting fe80::1 (fe80::1) on ens3...

Timed out.

Timed out.

...

Their IPv6 prefix has disappeared from the DFZ as well: https://lg.ring.nlnog.net/prefix_detail/lg01/ipv6?q=2406:ef80:4::/48
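The ndisc6 result above can be cross-checked against the kernel's own neighbor cache: an entry for the gateway in state `FAILED` or `INCOMPLETE` means no neighbor advertisement came back. A small sketch, assuming iproute2's `ip -6 neigh show` output with the address as the first field and the state as the last; `neigh_state` is a hypothetical helper name.

```shell
# Print the neighbor-cache state (e.g. REACHABLE, STALE, FAILED) for
# one address, reading `ip -6 neigh show` output from stdin.
neigh_state() {
  awk -v gw="$1" '$1 == gw { print $NF }'
}

# Example usage (interface name taken from the ndisc6 command above):
#   ip -6 neigh show dev ens3 | neigh_state fe80::1
```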

Welp.


Hope threads won’t be closed here because people complain about the provider.

Why?

TBF, one guy at OGF had an abuse case against him and the other had used up his bandwidth allocation, and both were spamming multiple threads incessantly. The concerning stuff is the loss of IPv6 connectivity here and the data loss incident over at OGF.

As long as we all keep it civil, threads stay open.

https://clients.mrvm.net

Has there been a data leak?

data loss, aka involucration.

Little 1's and 0's laying all over the floor. It's a huge mess.