Differential vs Incremental Backup

I am wondering which would be best for backing up my Windows OS drive and some specific folders.

If I understand correctly, incremental backups would require less storage space and would probably run faster; however, to restore I'd need the full backup plus every incremental backup in the chain to be uncorrupted, correct?

Differential backups, on the other hand, would take a bit longer and need more disk space, but I could still restore even if a previous differential backup was corrupted?

I see that most shared hosting providers seem to use incremental backups. Is that also the preferred approach for my use case, or would you prefer differential?

Differential or Incremental Backup?

- What would you recommend? (13 votes)

- Differential Backup: 38.46%

- Incremental Backup: 61.54%

Comments

The old strategy was a Tower of Hanoi backup pattern. IIRC it had 3 or so levels: a monthly full dump, plus weekly and daily incrementals. By making each incremental relative to the second-most-recent higher-level dump instead of the most recent one, you could survive the failure of any single dump and still restore from yesterday. I think these days, serious shops use more automated approaches.
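willie's variant (basing each incremental on the second-most-recent higher-level dump) is more involved than this, but for comparison here is a minimal sketch of the classic Tower of Hanoi media rotation: the backup set you reuse on day n is given by the number of trailing zero bits of n, capped at the number of sets. The function name and the A-D set labels are illustrative only.

```python
def hanoi_set(day: int, num_sets: int) -> int:
    """Return the 0-based backup set to use on `day` (1-based) in a classic
    Tower of Hanoi rotation with `num_sets` sets.

    Set k (for k < num_sets - 1) is used every 2**(k+1) days, starting on
    day 2**k; the last set takes all remaining days, i.e. it is used every
    2**(num_sets - 1) days.
    """
    if day < 1 or num_sets < 1:
        raise ValueError("day and num_sets must be >= 1")
    # Number of trailing zero bits in `day` (the "ruler sequence").
    trailing_zeros = (day & -day).bit_length() - 1
    return min(trailing_zeros, num_sets - 1)


# 16 days with four sets A-D:
print("".join("ABCD"[hanoi_set(d, 4)] for d in range(1, 17)))  # ABACABADABACABAD
```

The older sets are overwritten less and less often, so you always have a spread of restore points going back further in time.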

I have the same interpretation.

Incremental: each backup is just the delta from the previous backup. To restore, you'll need the full backup plus every delta up to the date you want to recover.

Differential: each backup is a delta from your base image, so it includes all changes made since the base (full) backup. To restore, you'll only need the base image plus the latest differential.
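To make the restore-chain difference concrete, here is a toy Python model (an illustration only, not how AOMEI or any real tool stores data). It assumes a snapshot is a dict of file path to content, ignores deletions, and has each backup store only the files that changed relative to its reference point.

```python
from typing import Dict, List

# Toy model: a snapshot maps file path -> content. Deletions are ignored.
Snapshot = Dict[str, str]


def delta(old: Snapshot, new: Snapshot) -> Snapshot:
    """Files added or changed between two snapshots."""
    return {path: data for path, data in new.items() if old.get(path) != data}


def restore(full: Snapshot, deltas: List[Snapshot]) -> Snapshot:
    """Apply the given deltas, oldest first, on top of a copy of the full backup."""
    state = dict(full)
    for d in deltas:
        state.update(d)
    return state


days = [
    {"a.txt": "v0", "b.txt": "v0"},                 # day 0: full backup
    {"a.txt": "v1", "b.txt": "v0"},                 # day 1
    {"a.txt": "v1", "b.txt": "v1"},                 # day 2
    {"a.txt": "v2", "b.txt": "v1", "c.txt": "v0"},  # day 3
]
full = days[0]

# Incremental: each delta is relative to the previous day.
incrementals = [delta(days[i - 1], days[i]) for i in range(1, len(days))]
# Differential: each delta is relative to the full backup.
differentials = [delta(full, days[i]) for i in range(1, len(days))]

# Restoring day 3 needs the whole incremental chain...
assert restore(full, incrementals) == days[3]
# ...but only the latest differential.
assert restore(full, differentials[-1:]) == days[3]
# Losing the day-2 incremental silently leaves b.txt at v0.
assert restore(full, [incrementals[0], incrementals[2]]) != days[3]

# Stored file copies: differentials grow because they repeat earlier changes.
print(sum(map(len, incrementals)), sum(map(len, differentials)))  # 4 6
```

The last line shows the space trade-off the original post guessed at: the differentials store 6 file copies versus 4 for the incrementals, in exchange for a much shorter restore chain.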

On my Windows laptop, where I run AOMEI, I usually take differential backups. I don't make enough changes for the daily backups to grow too large, and I create a new base image every quarter. I'd rather not have to rely on every daily backup in an incremental chain being intact in order to restore. If a differential backup gets corrupted, I can use the previous one and only lose one day of changes.
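The "only lose one day" argument can be checked with a small hypothetical helper. It assumes one backup per day, a base image (day 0) that is intact, and a set of day numbers whose backup files are corrupted; it returns the newest day you could still restore to under each scheme.

```python
from typing import Set


def latest_restorable_day(corrupt: Set[int], last_day: int, scheme: str) -> int:
    """Newest day (0..last_day) you can still restore to.

    Day 0 is the full/base backup (assumed intact); days 1..last_day each
    have one incremental or differential backup, and `corrupt` holds the
    days whose backup is unreadable.
    """
    if scheme == "incremental":
        # Every link from day 1 up to the target day must be intact.
        day = 0
        while day < last_day and (day + 1) not in corrupt:
            day += 1
        return day
    if scheme == "differential":
        # The base plus any single intact differential is enough.
        intact = [d for d in range(1, last_day + 1) if d not in corrupt]
        return max(intact, default=0)
    raise ValueError(f"unknown scheme: {scheme}")


# A week of daily backups where day 3's file is corrupted:
print(latest_restorable_day({3}, 7, "incremental"))   # 2
print(latest_restorable_day({3}, 7, "differential"))  # 7
# If the newest differential is the broken one, you lose one day:
print(latest_restorable_day({7}, 7, "differential"))  # 6
```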

Also an interesting approach! Thanks, mate!

Thanks for chiming in, mate! Especially because you also use AOMEI. Yeah, I tend to lean towards differential backups. Thanks again!


Ympker's Shared/Reseller Hosting Comparison Chart, Ympker's VPN LTD Comparison, Uptime.is, Ympker's GitHub.

Just do a full backup if you have the space. It's more reliable.

Incremental-forever does pose a risk that grows over time, so most people use some sort of mix, like incremental for daily, differential for weekly, and a periodic full backup. A good backup routine should also include some form of verification, or even a test restore.
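One simple form of verification is a checksum manifest: record a SHA-256 for every file at backup time, then compare a test restore against it. Below is a minimal sketch under that assumption. The function names are hypothetical, and a plain directory copy stands in for whatever restore your backup tool actually performs.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large images don't fill RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file under `root` (relative POSIX path) to its SHA-256."""
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: Dict[str, str], restored_root: Path) -> List[str]:
    """Paths from the manifest that are missing or different after a restore."""
    problems = []
    for rel_path, digest in manifest.items():
        candidate = restored_root / rel_path
        if not candidate.is_file() or sha256_file(candidate) != digest:
            problems.append(rel_path)
    return problems


with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp, "source")
    (source / "docs").mkdir(parents=True)
    (source / "docs" / "notes.txt").write_text("hello")
    manifest = build_manifest(source)
    restored = Path(tmp, "restored")
    shutil.copytree(source, restored)  # stand-in for a real test restore
    print(verify_restore(manifest, restored))  # []
```

An empty list means every file came back bit-for-bit identical. Any path listed is either missing or corrupted in the restore.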

You could google "tower of hanoi rotation" or "grandfather father son rotation" if you want to learn more about backup rotation and scheduling, it should give you a good start.
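As a taste of grandfather-father-son, here is a minimal retention sketch. It assumes one backup per day, with Sunday backups acting as the weekly "fathers" and backups taken on the 1st of the month acting as the monthly "grandfathers". The 7/4/12 counts are arbitrary examples. The sketch only decides which backups to keep; it does not say whether each one is full, incremental or differential.

```python
from datetime import date, timedelta
from typing import Iterable, Set


def gfs_keep(backup_dates: Iterable[date], daily: int = 7, weekly: int = 4,
             monthly: int = 12) -> Set[date]:
    """Dates to keep under a simple GFS policy; everything else may be pruned."""
    newest_first = sorted(backup_dates, reverse=True)
    sons = newest_first[:daily]
    fathers = [d for d in newest_first if d.weekday() == 6][:weekly]  # Sundays
    grandfathers = [d for d in newest_first if d.day == 1][:monthly]  # 1st of month
    return set(sons) | set(fathers) | set(grandfathers)


end = date(2021, 12, 2)
history = [end - timedelta(days=i) for i in range(93)]  # daily, 2021-09-01 .. 2021-12-02
keep = gfs_keep(history)
print(len(keep))  # 13
print(sorted(d.isoformat() for d in keep))
```

Out of 93 daily backups, only 13 are retained, yet you can still go back roughly three months.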

Thanks for explaining! Yeah, it was really interesting to hear @willie mention the Tower of Hanoi. I will read up on it, decide how much importance to assign to each backup, and work out how to schedule them.


I thought it was the other way around, but maybe I'm mistaken.

The now very ancient article The Tao Of Backup (http://taobackup.com/) is still worth reading. It discusses use cases for backups and strategies for testing. The "surprise" ending is that it's a sales pitch for backup software. I don't know if that software is even still available, but the article is worth reading. It is old enough that 20GB is considered a large amount of data, heh.

As a separate thought, it seems to me there are two kinds of files, which I'll call mutable and immutable. Mutable files can change after the file has been created: e.g. you write a document into a file, and then edit it later. Immutable files never change, though they can be deleted.

It also seems to me that most large files (let's say > 10MB) tend to be immutable. The main exception is probably database images where you might have millions of rows that get updated sometimes.

Ideally, a backup strategy should take mutable vs. immutable into account. Unfortunately, our current file systems don't distinguish between the two. Maybe file systems should add a way to mark a file as "frozen". Once frozen, it can be copied or deleted, but not changed or "thawed". Frozen files could be permanently associated with, e.g., a SHA256 checksum, and the backup system could then know where copies of the frozen files are stored.
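No file system offers this today, but the bookkeeping side of the idea can be sketched. The `FrozenCatalog` class below is entirely hypothetical. Because a frozen file can never change, its SHA-256 digest identifies it permanently, so the backup system only has to track where copies of each digest live and flag any content that has too few of them.

```python
import hashlib
from typing import Dict, Set


class FrozenCatalog:
    """Hypothetical catalog of "frozen" (immutable) files, keyed by SHA-256."""

    def __init__(self) -> None:
        self.locations: Dict[str, Set[str]] = {}  # digest -> places holding a copy

    def register_copy(self, data: bytes, location: str) -> str:
        """Record that `location` holds a copy of `data`; return its digest."""
        digest = hashlib.sha256(data).hexdigest()
        self.locations.setdefault(digest, set()).add(location)
        return digest

    def copy_count(self, digest: str) -> int:
        return len(self.locations.get(digest, set()))

    def under_replicated(self, min_copies: int = 2) -> Set[str]:
        """Digests with fewer than `min_copies` known copies (still need backing up)."""
        return {d for d, locs in self.locations.items() if len(locs) < min_copies}


catalog = FrozenCatalog()
photo = b"example photo bytes"
digest = catalog.register_copy(photo, "laptop:/photos/img001.raw")
catalog.register_copy(photo, "nas:/backup/img001.raw")
print(catalog.copy_count(digest))  # 2
```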

We are not yet there, though.

@Ympker - Do take a look at https://borgbackup.readthedocs.io/en/stable/ ("Borg", which is what I use for backups on Linux). Borg itself doesn't really work (well enough right now) on Windows. There are other somewhat similar tools like Duplicacy, Duplicati and Restic.

Essentially, it's the "chunking" that gives you a lot of the space savings: it deduplicates (and compresses) backups, so you can keep many versions, with checksum-based integrity checks (etc.).
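Here is a toy illustration of why chunk-level deduplication saves space. Borg's real implementation uses content-defined chunking (chunk boundaries depend on the data, so inserting bytes doesn't shift every later chunk), plus compression and optional encryption. This sketch deliberately uses tiny fixed-size chunks just to show the dedup and integrity-check idea. The `ChunkStore` class is hypothetical.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4  # bytes; absurdly small so the demo is readable


class ChunkStore:
    """Toy deduplicating repository using fixed-size chunks."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}  # SHA-256 hex digest -> chunk

    def add(self, data: bytes) -> List[str]:
        """Store `data`; return the list of chunk digests ("recipe") to rebuild it."""
        recipe = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # already stored -> no new space used
            recipe.append(digest)
        return recipe

    def restore(self, recipe: List[str]) -> bytes:
        """Rebuild data, checking each chunk still matches its digest."""
        parts = []
        for digest in recipe:
            chunk = self.chunks[digest]
            if hashlib.sha256(chunk).hexdigest() != digest:
                raise ValueError(f"corrupt chunk {digest[:12]}")
            parts.append(chunk)
        return b"".join(parts)


store = ChunkStore()
monday = store.add(b"AAAABBBBCCCC")
tuesday = store.add(b"AAAABBBBDDDD")  # only the last chunk changed
print(len(store.chunks))  # 4
assert store.restore(monday) == b"AAAABBBBCCCC"
```

Two 12-byte versions need only 4 unique chunks (16 bytes) instead of 24 bytes, and every version remains a complete, independently restorable recipe.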

I know there are plenty of folks here who swear by Borg. @vimalware definitely comes to mind. I also use rsync (*nix of course) very heavily on some rather large (and esoteric) file collections, and it has never let me down (barring my own stupidity, of course). More recently, I've become more and more dependent on ZFS (and snapshots) and the convenience they offer for simplifying some backup management. ZFS, though, is a more complex beast, and recovery isn't very straightforward should you run into some arcane issue (unlike rsync+ext4, which is far more robust and resilient from a partial-recovery perspective, just in case you run into Mr. Murphy somewhere). Tagging @Falzo (our resident German storage expert) just because I can, and I know he'll add some more value here.

You can also take a look at Borgmatic (which is a very convenient wrapper around Borg). I use Borgmatic as well and it makes a lot of things much easier to manage/script/configure.

@willie - I'm sure you'll enjoy reading and getting familiar with Borg in case you aren't already.

Also, @Ympker - specifically to your question on Windows backups, Veeam meets all your requirements (and is also free for personal use). Also look up the Veeam NFR (not-for-resale) key, which gives you a LOT more, just in case you need it.

I'm also sure someone here can and will chime in on PBS (Proxmox Backup Server) which is also very useful to know about though it may not really fit your bill based on your original post.

I just run a full backup, since space/cost isn't a concern. I should store more versions, though it's not strictly necessary.

ExtraVM

I think @beagle described current usage correctly, but "incremental" is sometimes used to mean "differential".

"A single swap file or partition may be up to 128 MB in size. [...] [I]f you need 256 MB of swap, you can create two 128-MB swap partitions." (M. Welsh & L. Kaufman, Running Linux, 2e, 1996, p. 49)

Very interesting! Will read into it! Thanks

Thank you for the thorough feedback, mate. I recently purchased AOMEI Backupper Pro (as recommended by @beagle) and was going to use it for my backups. The features seem decent enough (https://www.aomeitech.com/ab/professional.html), and I will try to apply some of the tips & tricks from you guys here. That makes it even more interesting!


That's not to say I'm not open to hearing about the strategies and backup schemes you guys use.

Fair enough! The Windows drive is about 150 GB right now, so I wouldn't want to store too many full backups of it. The individual folders are another story, since they are usually smaller.


I tend to be old-school and still use tar + ftp, but yeah, it's not fancy. I keep telling myself that I need to learn to use one of the fancy tools.

And there's also dump, which can do incremental (i.e., differential) backups, but it's file-system dependent.
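The "(i.e., differential)" remark comes from how dump levels work. A dump at level N copies everything changed since the most recent dump of a lower level. So whether you get incremental or differential behaviour depends only on which levels you schedule. The helper below models that rule. It is a hypothetical illustration, not part of dump itself, which keeps its own records of previous dumps.

```python
from typing import List, Optional


def reference_dump(history: List[int], level: int) -> Optional[int]:
    """Index into `history` (levels of earlier dumps, oldest first) of the dump
    that a new dump at `level` is relative to: the most recent dump with a
    strictly LOWER level. Returns None if there is none (a full dump).
    """
    for i in range(len(history) - 1, -1, -1):
        if history[i] < level:
            return i
    return None


# Repeating level 1 after a level 0 always refers back to the full dump
# (differential behaviour)...
print(reference_dump([0, 1, 1, 1], 1))  # 0
# ...while increasing levels refer to the previous dump (incremental behaviour).
print(reference_dump([0, 1, 2, 3], 4))  # 3
```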


As long as it works for you, there's nothing wrong with that. I just wanted a fancy GUI this time around.


FWIW, I just wrote a backup script today that downloads a full FTP backup of my website (on MyW shared hosting) to my VirtualBox VM, into a folder named "dd:mm:yyyy", and then copies it over to my local NAS. My Bash is kinda rusty, though, so it took me forever. I guess I should also check whether I can use SFTP instead of FTP with MyW Shared Hosting. FTP is just... ugh.
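For comparison, here is a minimal Python sketch of the "download into a dated folder" step using the standard library's `ftplib`. It assumes the host supports explicit FTP over TLS (FTPS), which encrypts the transfer. That is not the same thing as SFTP, which `ftplib` cannot do; SFTP would need a third-party library such as paramiko. The function names, host, credentials and paths are placeholders, and the sketch fetches a single file rather than mirroring a whole site.

```python
import ftplib
from datetime import date
from pathlib import Path, PurePosixPath


def dated_backup_dir(base: Path, day: date, fmt: str = "%d:%m:%Y") -> Path:
    """Folder for one day's backup, e.g. base/02:12:2021 with the default format."""
    return base / day.strftime(fmt)


def fetch_file(host: str, user: str, password: str,
               remote_path: str, base: Path) -> Path:
    """Download one remote file into today's dated folder over FTPS.

    Assumes explicit FTP over TLS is available on `host` (placeholder).
    """
    target = dated_backup_dir(base, date.today()) / PurePosixPath(remote_path).name
    target.parent.mkdir(parents=True, exist_ok=True)
    with ftplib.FTP_TLS(host) as ftp:
        ftp.login(user, password)  # FTP_TLS secures the control connection here
        ftp.prot_p()               # and this encrypts the data connection too
        with target.open("wb") as f:
            ftp.retrbinary(f"RETR {remote_path}", f.write)
    return target
```

The folder format is a parameter because colons are not allowed in Windows file names, so a "dd:mm:yyyy" folder may cause trouble if the NAS is reached over SMB. A format like "%Y-%m-%d" avoids that and also sorts chronologically.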

Also, I'm not sure if it's just FTP, but download speeds from the FTP server are around 500 Kbit/s (according to iftop). Is that normal for FTP? Normally I'd get at least 50 Mbit/s download on that PC; also, I am in Germany and @MikePT's server is at Hetzner, so...


I remember looking into Borg and not pursuing it, but I've forgotten specifics. There was another one (duplicity?) that I tried for a while and found annoying. So now I just use rsync. I will keep trying to figure out alternatives. What I really wish for is affordable tape drives. I do think tape is superior to disk for long term backups.

Doesn't FTP lack CRC/checksum? I use rsync/ssh ... (Or rclone sync.)

Indeed, the FTP standard lacks CRC or checksum, so nothing beyond TCP for ensuring data integrity, as far as the standard is concerned.

There has been a suggestion to extend the FTP standard with a HASH command, but it's experimental and (I guess) is unlikely to be approved at this stage. See https://tools.ietf.org/id/draft-bryan-ftp-hash-03.html


Are you saying that downloading the same file f from machine A to machine B is considerably/radically slower via ftp than via http? There shouldn't be a dramatic/significant difference between the two.

You might try a few different FTP clients to see whether there's a difference.

Otherwise, it may be with how the FTP server is set up, in which case it may be worth a ticket.


Hmm... I don't think it's an issue with @MikePT, but rather with my VirtualBox VM.

When I try to download something via FTP+WinSCP on my Windows Rig, I get up to 6 Mb/s download.

When I try to fetch something over FTP from my VirtualBox Ubuntu VM (wget -r ftp://....), it doesn't download faster than about 50 Kbit/s according to iftop. Will have to check on that tomorrow.


A fairly controlled test would be to put one rather big file X on your shared hosting site (available via both FTP and HTTP) and then compare

wget ftp://...X

with

wget http://...X

on your VM.
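If you want the same comparison as numbers rather than two wget screens, here is a small Python sketch. Python's `urllib.request.urlopen` accepts both `http://` and `ftp://` URLs, so one function can time either. The URLs in the commented-out loop are placeholders for your file X, and `timed_download` and `mbit_per_s` are illustrative names.

```python
import time
import urllib.request


def mbit_per_s(num_bytes: int, seconds: float) -> float:
    """Convert a transfer of `num_bytes` in `seconds` to megabits per second."""
    return num_bytes * 8 / seconds / 1_000_000


def timed_download(url: str, chunk_size: int = 1 << 16) -> float:
    """Download `url` (http://, https:// or ftp://), discard the data,
    and return the average throughput in Mbit/s."""
    total = 0
    start = time.monotonic()
    with urllib.request.urlopen(url) as resp:
        while True:
            chunk = resp.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    return mbit_per_s(total, time.monotonic() - start)


# Placeholder URLs for the same big file served both ways:
# for url in ("ftp://user:pass@example.com/X", "http://example.com/X"):
#     print(url, round(timed_download(url), 1), "Mbit/s")
```

Reporting both results in Mbit/s also makes them directly comparable with iftop, which shows bits per second by default, whereas wget reports bytes per second.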

Good idea, will do that tomorrow! Thanks


@angstrom

It appears to be an issue with my Ubuntu Virtualbox.

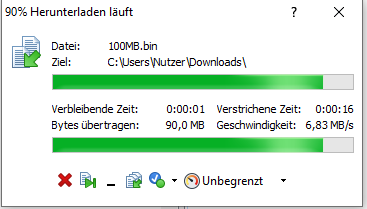

Downloaded a 100 MB test file from my FTP server:

Virtualbox - Ubuntu 20.04:

Speedtest:

FTP download (way slower):

    --2021-12-02 11:09:09--  ftp://nicolas-loew.de/public_html/test/100MB.bin
               => ‘nicolas-loew.de/public_html/test/100MB.bin’
    ==> CWD not required.
    ==> PASV ... done.    ==> RETR 100MB.bin ... done.
    Length: 104857600 (100M)

    nicolas-loew.de/public_html/test/100MB.bin 100%[===============================================================================================================>] 100,00M  801KB/s    in 2m 17s

    2021-12-02 11:11:26 (746 KB/s) - ‘nicolas-loew.de/public_html/test/100MB.bin’ saved [104857600]

    FINISHED --2021-12-02 11:11:26--
    Total wall clock time: 2m 18s
    Downloaded: 2 files, 100M in 2m 17s (746 KB/s)

Windows 10 rig (the VirtualBox host), downloading with WinSCP:

That was done much faster (in about 16 seconds):
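To compare these two runs with the bit-based figures quoted earlier (iftop and the ~50 Mbit/s line), convert both to Mbit/s. This uses the 104857600-byte file size and 2 m 17 s from the wget log, and the roughly 16 s reported for WinSCP:

```latex
\begin{aligned}
\frac{104\,857\,600 \times 8\ \text{bit}}{137\ \text{s}} &\approx 6.1\ \text{Mbit/s} && \text{(VM, wget over FTP)}\\
\frac{104\,857\,600 \times 8\ \text{bit}}{16\ \text{s}} &\approx 52.4\ \text{Mbit/s} && \text{(host, WinSCP)}
\end{aligned}
```

The first figure is consistent with wget's "746 KB/s", since 104857600 / 137 is about 765,000 bytes/s, or about 747 KB/s with 1024-byte kilobytes. The host's transfer roughly matches the expected line speed, while the VM gets about a ninth of it.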


Indeed, it looks like an issue with your Ubuntu Virtualbox.

Nevertheless, any chance of downloading the same file X via HTTP for a direct comparison with FTP? (wget http://...X)